First, a quick rundown of basic terms:

LLM (Large Language Model)

A Large Language Model is a type of AI trained on huge amounts of text data to understand and generate human-like language. Think of it like a super-smart-arse: it can write essays, answer questions, summarise information, and even hold conversations. GPT-4 and Gemini are LLMs.

Multimodal

A multimodal AI can understand and generate more than just text. It might handle images, audio, video, or a mix of these. For example, an AI that can look at a picture and describe it in words, or generate an image from a text prompt, is multimodal.

Prompt Engineering

Prompt engineering is the art and science of crafting your input (in my posts I compare prompts to witchcraft spells). A well-crafted prompt gets better or more specific results from an AI. Since LLMs respond differently depending on how you phrase things, tweaking your prompt can make a big difference. It’s almost like learning to speak the AI’s language.

Training Data

Training data is the information used to teach an AI. For LLMs, this usually means a giant collection of books, articles, websites, and sometimes code or conversations. The more and better data an AI trains on, the smarter and more versatile it gets.

Tokens

Tokens are the chunks of text, usually whole words or parts of words, that AI models process. Everything you type gets broken down into tokens. Each model has a limit on how many tokens it can handle in one go (often called its context window), which affects how long an answer or conversation can be.
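To make the idea concrete, here is a toy sketch in Python. It is a deliberate simplification and an assumption on my part: real LLMs use learned subword tokenizers (such as byte-pair encoding), so their token counts will differ. The names `toy_tokenize` and `fits_in_context`, and the 10-token limit, are made up for illustration.

```python
import re

# Hypothetical, deliberately tiny limit; real models allow thousands of tokens.
TOY_CONTEXT_LIMIT = 10

def toy_tokenize(text: str) -> list[str]:
    """Split text into words and punctuation marks (a crude stand-in for a real tokenizer)."""
    return re.findall(r"\w+|[^\w\s]", text)

def fits_in_context(text: str, limit: int = TOY_CONTEXT_LIMIT) -> bool:
    """Return True if the toy token count is within the limit."""
    return len(toy_tokenize(text)) <= limit

prompt = "Describe a stormy sea, in the style of Turner."
tokens = toy_tokenize(prompt)
print(tokens)
print(len(tokens), fits_in_context(prompt))  # 11 False
```

Here the example prompt comes out at 11 toy tokens, one over the made-up limit.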

AI Hallucinations

AI hallucinations happen when a model confidently makes up information that isn’t true or wasn’t in its training data. For example, it might invent fake statistics or cite nonexistent books. This is one of the biggest challenges in current AI systems.

AI Agents

An AI agent is a system that can take actions on its own, not just generate text. Agents might plan steps to solve a problem, use tools, or even interact with other software or the web. Instead of just answering a question, an agent could book a flight, scrape websites, or manage your calendar.
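A minimal sketch of the "plan steps, use tools" idea, written as a toy tool-using loop in Python. Everything here is hypothetical: a real agent would ask an LLM to decide each next step, whereas this toy follows a hard-coded plan, and the tools `search_flights` and `add_to_calendar` are invented stand-ins that return canned text rather than calling any real service.

```python
from typing import Callable

# Hypothetical tools: stand-ins for real services an agent might call.
def search_flights(destination: str) -> str:
    return f"Cheapest flight to {destination}: Tuesday 09:00"

def add_to_calendar(event: str) -> str:
    return f"Added to calendar: {event}"

# The agent can only use tools it has been given, looked up by name.
TOOLS: dict[str, Callable[[str], str]] = {
    "search_flights": search_flights,
    "add_to_calendar": add_to_calendar,
}

def run_agent(plan: list[tuple[str, str]]) -> list[str]:
    """Carry out each (tool name, argument) step in order and collect the results.

    In a real agent an LLM would write and revise this plan; here it is fixed.
    """
    observations = []
    for tool_name, argument in plan:
        tool = TOOLS[tool_name]              # pick the tool the plan asks for
        observations.append(tool(argument))  # act, then record what happened
    return observations

plan = [
    ("search_flights", "Glasgow"),
    ("add_to_calendar", "Flight to Glasgow, Tuesday 09:00"),
]
for line in run_agent(plan):
    print(line)
```

The point of the sketch is the difference from a chatbot: the output is a series of actions taken with tools, not just a block of generated text.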

Closed Source & Open Source

- Closed Source means the code (and often the data) behind an AI or software isn’t shared with the public. Only the company or creators can see, change, or use it fully.

- Open Source means the code is available to anyone. You can look at it, modify it, and use it as you wish (within license rules). Open-source AI is important for transparency and community innovation.

These are some of the digital tools I’ve used during my MA:

ChatBox AI

ChatBox AI uses large language models (LLMs) to power its chatbot experiences, usually based on closed-source models, though some platforms allow for limited customisation.

It’s not truly multimodal (it’s mostly text-based), but as with all current AI models you need decent prompt engineering: ‘shit in, shit out’ is a good mantra.

Have a conversation with it! Apparently the Samaritans are finding their services being replaced by this type of chat-based model.

The platform handles tokens well, but token limits can affect how long or complex interactions can be. Hallucinations (AI making up facts) can happen, especially in nuanced conversations. The training data is proprietary, and you won’t have much control over fine-tuning. Generally, the platform is not open-source, and it focuses on simplicity over deep customisation or agentic behaviour. It’s a good platform for understanding the vox pop of chat AI.

Perplexity

Perplexity uses advanced LLMs (like OpenAI’s GPT-4) to cobble together answers from the web. Prompt engineering matters, since the phrasing of your question can change the quality of the answer. It’s unimodal (text only), and you don’t manage tokens directly; the backend does. Perplexity’s answers can include hallucinations, though web citations help mitigate this. It isn’t agent-based: it responds to queries but doesn’t take multi-step actions. The backend is closed-source, and the training data is broad but not user-configurable.

Lightroom

Lightroom wasn’t built around LLMs or prompt-based interfaces; it’s a photo editing tool. Adobe has started to add some AI-powered features, which I’ve enjoyed using. It’s always a game of catch-up for tech companies. It’s multimodal in the sense that it works with images and metadata, and its prompt-based features are still developing. There are no AI agents involved. The AI components are closed-source, trained on Adobe’s internal image datasets. There’s little risk of hallucination since it’s not generating content the way LLMs do.

InShot

InShot’s AI features (like auto-cropping, background blur and voice-over) are not based on LLMs, and it’s not multimodal in an AI sense. It is a basic, traditional video/photo editor that works well on a phone and an iPad, which makes it great for workshops and for introducing folks to an editing platform. No prompt engineering or token concepts apply. Its AI capabilities are closed-source and trained on proprietary data. It doesn’t hallucinate or employ AI agents. I’ve used it to edit all of the animations; you didn’t think AI did all the work, did you?

Runway

Runway is a creative suite that increasingly uses state-of-the-art LLMs and multimodal AI models. Prompt engineering is a core part of getting the best from its generative tools. The models handle tokens behind the scenes. Hallucinations, like odd artifacts in generated videos, can occur, especially with more ambitious prompts. Some Runway models are based on open-source projects (like Stable Diffusion), but most are closed-source and trained on internal datasets. No true AI agents yet, but the platform is moving toward more autonomous creative tools.

DALL-E

DALL-E is a multimodal AI from OpenAI that turns text prompts into images. It’s driven by a large, closed-source model trained on a vast, but not fully public, dataset of images and text. Prompt engineering hugely impacts results: subtle changes can yield wildly different images. Tokens are abstracted away, but they still cap how long your prompt can be. Hallucinations are common, especially with complex or ambiguous prompts (expect weird faces, for example). DALL-E is not open-source and doesn’t act as an AI agent; it generates on command.

Blender

Blender itself is not an AI product, but it increasingly supports plugins and add-ons that use LLMs or generative AI for texturing, animation, or scripting. Blender is fully open-source, and users can integrate open-source AI models or agents if they like. The core app is multimodal (3D, video, audio), but AI components depend on what you add. Prompt engineering and tokens only matter if you use these AI plugins. No built-in LLMs or hallucinations unless you bring your own.

Procreate

Procreate doesn’t use LLMs or prompt-based design; it’s a drawing/painting app. AI is limited to things like brush smoothing or color prediction. No multimodal AI, prompt engineering, or token concepts apply. The app is closed-source and not agentic, with minimal AI hallucination risk. It’s a self-contained program, so fill your boots. That said, keep it updated and back up your images and brushes, because it can bugger off and wipe out years of work in a glitch… that’s a bad day in the digital studio! I use it for all of my animation images.

Grammarly

Grammarly uses LLMs under the hood to assess and suggest changes to your writing. Prompt engineering isn’t exposed to users, but the way you write affects Grammarly’s suggestions. It’s text-only (unimodal) and manages tokens behind the scenes. Grammarly’s models are closed-source and trained on a proprietary mix of professional and user-submitted texts. Hallucinations can show up as awkward or incorrect suggestions, especially for creative writing. No agents, just real-time feedback. It is annoying AF and the bane of my dyslexic life, as it misses a lot of my devious spelling mistakes!

HyperWrite

HyperWrite is built around LLMs for writing assistance, using closed-source models trained on diverse internet and user data. Prompt engineering is important: how you ask for help changes the AI’s output. Tokens are managed in the background but influence how much text can be generated at once. HyperWrite is unimodal (text only), but the company is exploring more agentic features. It’s great for creatives to use for funding statements and strategy planning; think of it as a creative partner rather than just a tool. It’s not open-source, but it focuses on giving writers more control and creative collaboration. Hallucinations can happen, as with any LLM, but the platform is tuned to minimise obvious errors. That said, as with all this tech, you have to cross-check everything!

Photoleap

I am using this more and more. It’s great in a workshop setting, and folks love its output. It feels like the digital Swiss Army knife for anyone who wants to transform photos on their phone without getting bogged down in complex photo-editing tools. It started as a straightforward photo editor, but lately AI has become the star of the show. The app now includes features powered by large language models (LLMs) and generative AI, like the ability to create entirely new backgrounds, swap skies, or even generate art from text prompts.

Photoleap is multimodal, letting you blend text, images, and effects. The prompt engineering aspect is subtle but real: the more creative and specific you are with your prompts or selections, the more magical and less generic your results become.

Photoleap uses a blend of proprietary and some open-source AI models. The training data isn’t public, but you can see its influence: the app is especially good at trendy or clean looks. Sometimes, though, it’ll hallucinate details or give you results that feel a bit off, especially with more ambitious requests.

One thing you won’t notice as a user is the token limit or the nitty-gritty of how the AI processes your commands, since it’s all hidden behind a slick, user-friendly interface.

Photoleap isn’t an AI agent in the sense of automating tasks or making decisions for you. You have to use it as a creative partner, which I like, but creatives who are newer to tech might not get that at first.

Most of Photoleap’s advanced features are locked behind a paywall, but for casual creators, the free version is still packed. The app is closed-source, but that’s typical for consumer-focused creative tools.

Like any AI-driven editor, its results are sometimes hit or miss, so a little trial and error goes a long way.

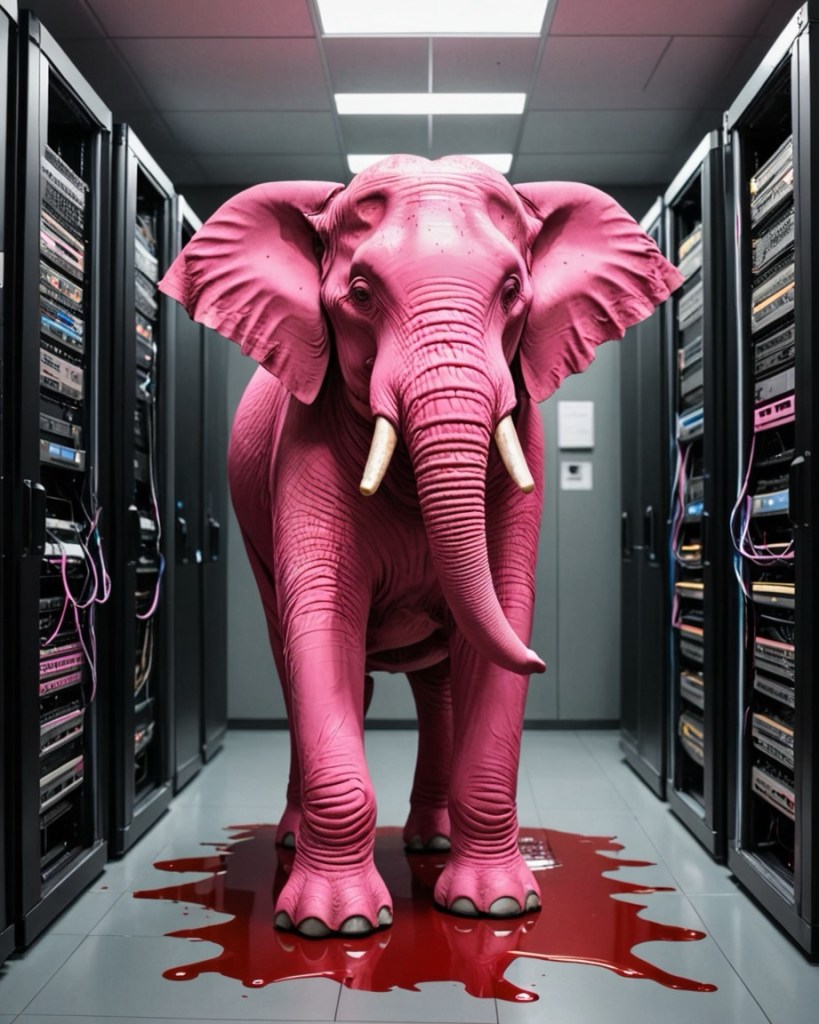

The Bloody Big AI Elephant in the Room!

Great point, Mx D.P.! As AI use grows, we have to understand and manage its environmental impacts. Here is an overview of the energy cost and water usage of each AI prompt:

Carbon Energy Cost per AI Prompt

Every time you send a prompt to a large language model (LLM) like GPT-4, Gemini, or similar, the model runs on massive server farms that use a significant amount of electricity. The actual energy cost of a single prompt varies depending on the model size, server efficiency, and the data center’s energy source.

- Rough estimate: for a single prompt to a large LLM (like GPT-4), estimates range from about 0.001 kWh to 0.01 kWh per query.

- For comparison, charging a smartphone once uses about 0.01 kWh.

- The carbon footprint of this energy depends on whether the data center uses renewable or fossil fuel energy. On coal-heavy grids, each prompt could emit roughly 1–10 grams of CO₂; on renewable grids, it’s much lower.
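The carbon figures come from a simple multiplication: energy per prompt (kWh) times the carbon intensity of the grid (grams of CO₂ per kWh). The intensities below are illustrative assumptions, not measured values: roughly 1,000 g/kWh for a coal-heavy grid and 50 g/kWh for a mostly renewable one.

```python
# Energy per prompt range quoted above (kWh): (low, high).
ENERGY_PER_PROMPT_KWH = (0.001, 0.01)

# Illustrative grid carbon intensities in grams of CO2 per kWh (assumed round numbers).
GRID_INTENSITY_G_PER_KWH = {
    "coal-heavy": 1000,
    "mostly renewable": 50,
}

def grams_co2(energy_kwh: float, intensity_g_per_kwh: float) -> float:
    """Emissions = energy used x carbon intensity of the electricity."""
    return energy_kwh * intensity_g_per_kwh

for grid, intensity in GRID_INTENSITY_G_PER_KWH.items():
    low, high = (grams_co2(e, intensity) for e in ENERGY_PER_PROMPT_KWH)
    print(f"{grid}: {low:g} to {high:g} g CO2 per prompt")
```

With these assumed intensities, the coal-heavy case reproduces the 1–10 gram range above, and the renewable case comes out twenty times lower.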

Water Usage per AI Prompt

Water is used in data centers primarily for cooling. When you prompt an AI, the servers heat up and need to be cooled, often via water-based systems.

- Rough estimate: recent research (2023) suggests that a single prompt to GPT-4 can use about 500 milliliters (half a liter) of water for cooling, depending on the data center and local climate.

- This is a hidden environmental cost that users don’t think about, but on a large scale (millions or billions of prompts), the water usage adds up quickly. Google now automatically turns many of your search questions into AI prompts. We are running out of water!
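To see how quickly it adds up, here is the arithmetic scaled up in Python. It takes the post’s ~500 mL per prompt figure at face value; the prompt counts are hypothetical round numbers, and `total_water_liters` is just an illustrative helper.

```python
# The post's per-prompt water estimate, taken at face value.
WATER_PER_PROMPT_ML = 500

def total_water_liters(num_prompts: int, ml_per_prompt: float = WATER_PER_PROMPT_ML) -> float:
    """Total cooling water in liters for a given number of prompts."""
    return num_prompts * ml_per_prompt / 1000  # 1,000 mL = 1 liter

# Hypothetical prompt counts: one prompt, a million, a billion.
for prompts in (1, 1_000_000, 1_000_000_000):
    print(f"{prompts:,} prompts -> {total_water_liters(prompts):,.1f} liters")
```

At a billion prompts that is half a billion liters, which is why the scale matters far more than any single query.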

Why These Numbers Vary

- Model Size: Bigger models like GPT-4 use more energy and water than smaller models.

- Prompt Length: Longer, more complex prompts take more computation, so they use more resources.

- Data Center Location: Some data centers use more sustainable energy and water-saving technologies than others.

- Infrastructure: Newer, more efficient hardware and cooling systems can reduce both carbon and water footprints.

Summary Table

| Metric | Estimate per prompt (GPT-4) |
|---|---|
| Energy usage | 0.001–0.01 kWh |
| Carbon emissions | 1–10 grams CO₂ (depends on energy source) |
| Water usage | ~500 mL (about half a liter) |

Caveats:

- These are averages and estimates; your specific prompt might use more or less.

- OpenAI, Google, Microsoft, and others are working on making their infrastructure greener, so these numbers are likely to improve over time.

HyperWrite, Photoleap, and Mx D.P. composed this blog post together… I wish it were an AI hallucination, but sadly, it isn’t!
